Developing tests: introduction

Testing software is important: it validates that things work as planned (or at all). Let's look at software testing in more detail.

Disclaimer and caveat: I am by no means an expert in this field and am still learning as I go. Writing this series should help cement my knowledge, and hopefully the information will be useful to other developers too.

I gave a talk titled Testing software with automated tests at codeHarbour on 19th May 2022. The video of my talk is up on YouTube if you're interested. This blog post doesn't follow the presentation exactly, so if you've got time, both are worth a look. Slides and notes from my talk can be found here.

When working with PHP I use the Codeception testing framework. I've not done any particular research into which framework is best, or compared features: I picked Codeception because it's what my coding partner is familiar with, and I learn a lot from him. Codeception also integrates neatly with Yii2 (the PHP framework I use). You can read more about Codeception on their site.

Why is this helpful?

Before issuing a new release of one of my projects (for example eVitabu) I run a number of tests. Initially I ran these by hand, but the list grew quite long, with tests to:

- Check I can log in

- Create and edit contributors

- Confirm resources can be created and edited

- Make and edit tags

- Post, edit and delete announcements

- Ensure static pages like the security policy and security acknowledgements are available

- ...

As you can see, even after combining several tests per bullet point that's over ten tests, and I've not listed everything. As the application grew, more testing was needed, so testing everything by hand in a browser took longer and longer. It's also worth remembering that, ideally, you should repeat every test every time you change something. That might seem excessive, but it's easy to lose track of which parts of your application depend on others: refactoring or removing a block of code can break areas you hadn't considered.

So, with the manual testing plan not scaling well: enter automated tests and Codeception.

Functional vs acceptance

Functional tests confirm the code you've written does what you intended it to do. For example, if your code creates a form with username and password boxes, a functional test would make sure both were present.
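To make the idea concrete, here is a minimal sketch of that functional check. It's written in Python with the standard library's `unittest` and `html.parser` rather than Codeception, so it runs on its own; `render_login_form` is a hypothetical stand-in for whatever actually renders your login page.

```python
import unittest
from html.parser import HTMLParser


def render_login_form() -> str:
    """Hypothetical view function standing in for the real login page."""
    return (
        '<form method="post" action="/site/login">'
        '<input type="text" name="username">'
        '<input type="password" name="password">'
        '<button type="submit">Log in</button>'
        "</form>"
    )


class InputCollector(HTMLParser):
    """Collects the name attribute of every <input> element in a page."""

    def __init__(self) -> None:
        super().__init__()
        self.names: set[str] = set()

    def handle_starttag(self, tag, attrs):
        if tag == "input":
            name = dict(attrs).get("name")
            if name:
                self.names.add(name)


def input_names(html: str) -> set[str]:
    """Return the set of input names found in an HTML string."""
    collector = InputCollector()
    collector.feed(html)
    return collector.names


class LoginFormTest(unittest.TestCase):
    def test_username_and_password_boxes_are_present(self):
        names = input_names(render_login_form())
        self.assertIn("username", names)
        self.assertIn("password", names)
```

Saved as a file named something like `test_login_form.py`, it can be run with `python -m unittest`. In a real project the HTML would come from your application rather than a hard-coded string.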

Acceptance tests confirm you've written what you were supposed to write. They're tied more to the specification that's agreed with your customer.
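For contrast, an acceptance-style test is organised around a requirement as it was agreed, rather than around how the code happens to be built. The sketch below assumes a requirement worded like the checklist item earlier ("post, edit and delete announcements") and a hypothetical in-memory `AnnouncementBoard` class. A real acceptance test would normally drive the running application, for example through a browser; this only illustrates the shape.

```python
import unittest


class AnnouncementBoard:
    """Hypothetical in-memory stand-in for an announcements feature."""

    def __init__(self) -> None:
        self._items: dict[int, str] = {}
        self._next_id = 1

    def post(self, text: str) -> int:
        item_id = self._next_id
        self._items[item_id] = text
        self._next_id += 1
        return item_id

    def edit(self, item_id: int, text: str) -> None:
        self._items[item_id] = text

    def delete(self, item_id: int) -> None:
        del self._items[item_id]

    def listing(self) -> list[str]:
        return list(self._items.values())


class AnnouncementRequirementTest(unittest.TestCase):
    """Requirement (hypothetical wording): users can post, edit and delete announcements."""

    def test_post_edit_and_delete(self):
        board = AnnouncementBoard()
        item_id = board.post("Training day on Friday")
        self.assertIn("Training day on Friday", board.listing())

        board.edit(item_id, "Training day moved to Monday")
        self.assertEqual(board.listing(), ["Training day moved to Monday"])

        board.delete(item_id)
        self.assertEqual(board.listing(), [])
```

The test reads like the requirement it checks, which makes it easier to confirm with whoever agreed the specification.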

When to write tests

I'm a rebel and vary when I write my tests.

There’s a risk that if you write your tests after the code, you’ll run out of time and never write them. This is particularly true if you’re following a waterfall development model, which generally places testing towards the end of a project. It’s also possible to introduce bias into your tests: “I know the system works this way, so if I write my test like this it will pass”. You may not intend to write a biased test, but it could happen.

Writing tests before your code requires some form of specification to test against. Once a test is written, run the test suite: the new test should fail, because there’s no code for that feature yet. If it passes, then either you’ve written a bad test, or someone has already written the code (maybe a colleague, or maybe you and you just forgot). Once the code is written, the tests should pass when you run them again.
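A small, hypothetical example of that test-first cycle, again using Python's `unittest`. The specification assumed here is that tag names are trimmed, lower-cased, and have runs of whitespace replaced by a single hyphen; `normalise_tag` is an invented helper, not part of eVitabu.

```python
import re
import unittest


# Step 1: the tests, written from the specification before the code exists.
class NormaliseTagTest(unittest.TestCase):
    def test_trims_lowercases_and_hyphenates(self):
        self.assertEqual(normalise_tag("  Early Years  "), "early-years")

    def test_collapses_repeated_whitespace(self):
        self.assertEqual(normalise_tag("Maths \t and   Science"), "maths-and-science")


# Step 2: running the suite before this function existed would fail both
# tests (unittest reports a NameError). Only then is the code written:
def normalise_tag(name: str) -> str:
    """Hypothetical helper: trim, lower-case, and join words with hyphens."""
    return re.sub(r"\s+", "-", name.strip().lower())


# Step 3: run the suite again; both tests should now pass.
```

If either test had passed at step 2, that would be the signal, as described above, that the test is wrong or the code already exists.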

Finds security issues

To use almost every part of eVitabu a user has to be logged in; the only exceptions are logging in, registering, and viewing the privacy policy, terms of service and security policy details. To check this, I have tests that confirm anonymous access (where no user is logged in) is not possible.

I wrote a batch of tests during dev week a few years ago, after being introduced to the concept by my coding partner. After running my first batch I noticed failures in some of the "prevent anonymous..." tests, which indicated that a user didn't need to be logged in. Sure enough, when I browsed to the page I could make changes to some aspects of the system: an authentication bypass. Because the test failed, I was able to fix the fault and maintain the security of the system.
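The anonymous-access tests boil down to one idea: request every page that should need a login while no one is logged in, and expect to be sent to the login page. Here is a framework-free Python sketch of that idea. The routes, `LOGIN_URL`, and the `require_login` wrapper are all hypothetical, and one route is deliberately left unprotected to mimic the kind of bug described above.

```python
from dataclasses import dataclass
from typing import Optional

LOGIN_URL = "/site/login"  # hypothetical login route


@dataclass
class Response:
    status: int
    location: Optional[str] = None  # redirect target, if any


def require_login(handler):
    """Wrap a handler so anonymous users are redirected to the login page."""
    def wrapped(user):
        if user is None:
            return Response(302, LOGIN_URL)
        return handler(user)
    return wrapped


def render_page(user):
    """Hypothetical handler that always renders its page."""
    return Response(200)


# Hypothetical routing table. /tag/update is missing require_login:
# exactly the kind of mistake these tests exist to catch.
ROUTES = {
    "/site/login": render_page,
    "/site/security-policy": render_page,
    "/tag/create": require_login(render_page),
    "/tag/update": render_page,
    "/announcement/create": require_login(render_page),
}

PUBLIC_PATHS = {"/site/login", "/site/security-policy"}


def anonymous_access_failures(routes, public_paths):
    """Return every non-public path that an anonymous visitor can reach."""
    failures = []
    for path, handler in routes.items():
        if path in public_paths:
            continue
        response = handler(None)  # None means "no user is logged in"
        if not (response.status == 302 and response.location == LOGIN_URL):
            failures.append(path)
    return failures


print(anonymous_access_failures(ROUTES, PUBLIC_PATHS))  # ['/tag/update']
```

Because the check loops over every route, a newly added page that forgets its login check is flagged without anyone having to remember to write a test for it.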

Test reports

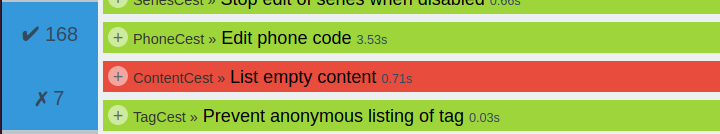

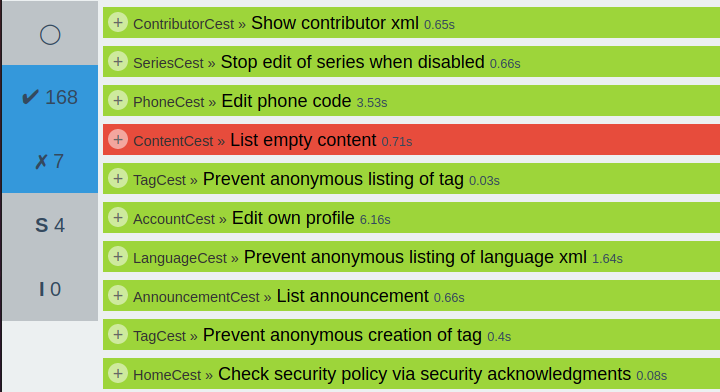

There are three ways I view the results of my tests. Firstly, I tend to run my tests from a terminal, where Codeception outputs a summary as it goes, reporting whether each test passed, failed, or errored, before summing up at the end.

My IDE, PhpStorm, also supports Codeception, so it can show test results while I'm working.

Finally, and my preferred option, Codeception can produce a handy HTML-based report of findings. You can filter the results to show only the failures.

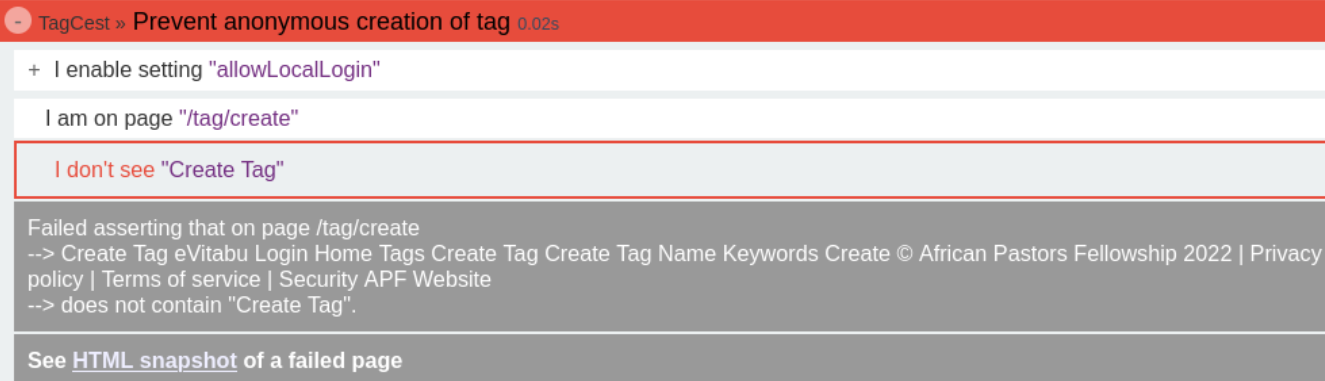

You can also expand a failed test to see the step at which it failed:

In the screenshot above, the first two steps (enabling a setting and moving to the tag creation page) passed. Step three, "I don’t see create tag", failed, as that text was visible on the page. Clicking the link to the HTML snapshot shows what was in the browser at that moment, without CSS or JavaScript (so it will look a bit ugly!).
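To illustrate how a report can pinpoint the failing step, here is a simplified Python sketch of a step runner that stops at the first failed step and keeps a copy of the page's HTML, loosely mirroring the scenario above. `FakeBrowser`, the `/tag/create` path and the `tag_creation_disabled` setting are hypothetical, and this is not how Codeception works internally.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class FakeBrowser:
    """Hypothetical stand-in for a test browser: renders pages from functions."""
    pages: dict
    settings: dict = field(default_factory=dict)
    html: str = ""

    def open(self, path: str) -> None:
        self.html = self.pages[path](self.settings)


def tag_create_page(settings: dict) -> str:
    # Deliberate bug: the page ignores the setting and always shows the form.
    return "<h1>Create Tag</h1><input name='tag'>"


def run_steps(steps: list[tuple[str, Callable[[], bool]]],
              snapshot: Callable[[], str]) -> Optional[dict]:
    """Run steps in order and stop at the first failure.

    Returns None if every step passes, otherwise the failing step's number,
    its description, and a snapshot of the page HTML at that moment.
    """
    for number, (description, step) in enumerate(steps, start=1):
        if not step():
            return {"step": number, "description": description, "snapshot": snapshot()}
    return None


browser = FakeBrowser(pages={"/tag/create": tag_create_page})


def enable_setting() -> bool:
    browser.settings["tag_creation_disabled"] = True  # hypothetical setting
    return True


def open_tag_creation_page() -> bool:
    browser.open("/tag/create")
    return True


steps = [
    ("I enable the setting", enable_setting),
    ("I am on the tag creation page", open_tag_creation_page),
    ("I don't see 'Create Tag'", lambda: "Create Tag" not in browser.html),
]

failure = run_steps(steps, snapshot=lambda: browser.html)
print(failure["step"], failure["description"])  # 3 I don't see 'Create Tag'
```

Keeping the raw HTML rather than a rendered screenshot is cheap, which is one reason a snapshot like this arrives without its CSS or JavaScript.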

Conclusion

Automated tests save time and effort, so they're well worth the investment. There's probably a test framework (or a few) for your language of choice, so take some time to find one that you like.

Fixing bugs during the development phase is almost always cheaper than fixing them after the code is in production, particularly if the problem is security related. Automated testing can help to head these problems off at the pass.

It's also reassuring to know that the changes you've just made to your project's source code haven't broken another area of your project. Running your tests before pushing changes to production is a good habit. Additionally, you could use CI/CD to run your tests each time someone attempts to merge into a particular branch, helping to catch problems early.

Banner image: cropped screenshot showing some passing tests and one failing test.