Business continuity - testing your disaster plan

Testing your business continuity plan is a worthwhile exercise. When did you last test yours?

Recently, one of the clients I work with held a two-hour simulation to test their business continuity (BC) plans. Much like doing a test restore of your backup, it's worth testing your BC plan before you need to rely on it, so let's look at the results.

First, I applaud the fact that this client tested their plan. The organisation probably has a couple of hundred people on site and the simulation affected a large portion of them (not everyone could be affected, as partner / outsourcing organisations share the same network), and no-one likes having their work day made more difficult. Nonetheless, the management team grabbed the bull by the horns and tested their plan - kudos.

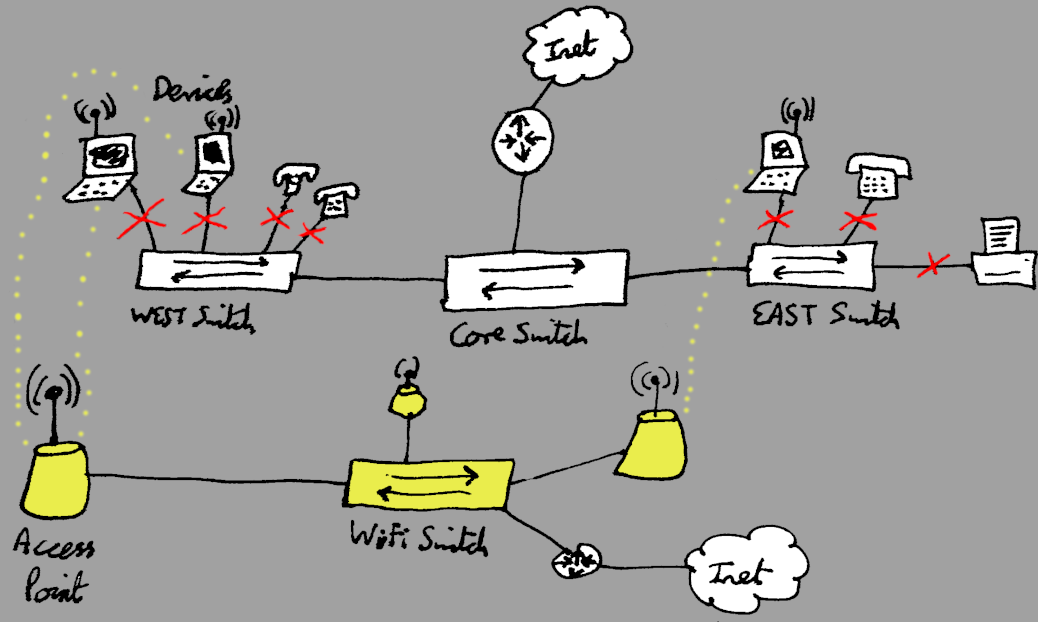

To describe the scenario: most users in the organisation work from laptops (either Windows or Chromebook) connected to the corporate cabled LAN. There's WiFi at the office that's fed by a separate Internet connection and runs over different switches. To simulate a network failure, the ports on the main network switches were shut down, resulting in a "not connected" message on users' screens.

Following the disconnection, the plan was for users to jump on the WiFi and either use their cloud-based tools (GSuite, other SaaS[1] solutions) or connect in to the corporate data centre via the remote access gateways. As the simulation was only for a network outage at the user level, the data centre was still up.

Advance notice

Communications were sent to the affected users letting them know what was going on. Admittedly, in the event of an actual failure there wouldn't be advance warning, but this did mean people could test ahead of schedule that they'd be able to work. Our remote access gateways require two-factor authentication and won't allow access without a token code, so the notice gave people time to request tokens in advance.

Sadly, it seems some people either missed the advance warning, misunderstood the implications or didn't pay attention, as users were phoning the service desk to request tokens up to ten minutes before the planned start of the outage.

Another option for people was to work from home on "outage day", although they'd still need to have a token already and be familiar with the process.

Impact on the WiFi

I spent some time monitoring the WiFi and taking regular snapshots from the UniFi dashboard. At the beginning of the test there were approximately 352 devices connected to the WiFi (this includes staff, guests, partners and multiple devices per person), so we'll consider that "normal". At peak only 389 devices were connected (up 37), which leads me to believe a number of devices were already dual-homed (on the cabled network and the WiFi). Alternatively, people may have connected ahead of time in readiness (unlikely given the scramble for tokens).
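To put that rise in context, here's a minimal sketch of the arithmetic using the two device counts quoted above. The function name is purely illustrative and isn't anything the UniFi dashboard provides.

```python
def connection_increase(baseline: int, peak: int) -> tuple[int, float]:
    """Return the absolute and percentage rise in connected devices."""
    if baseline <= 0:
        raise ValueError("baseline must be positive")
    extra = peak - baseline
    return extra, 100.0 * extra / baseline


# Figures taken from the dashboard snapshots described in the post.
extra, pct = connection_increase(baseline=352, peak=389)
print(f"{extra} extra devices, a rise of {pct:.1f}%")
# prints "37 extra devices, a rise of 10.5%"
```

A rise of roughly a tenth is small next to the number of users who lost their cabled connection, which fits the dual-homed theory.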

Across the estate, the bulk of the traffic seemed to be focused on five access points. That surprised me given how the access points are distributed through the building, and it suggests a review of their placement may be useful. Additionally, the majority of the connections were on the 2.4GHz band, though not many of the access points support 5GHz as yet.

Overall, the top access points accounted for around 6.8GB of traffic during the two hour test.
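As a rough sanity check, that total can be turned into an average throughput. This is only a sketch: it assumes decimal gigabytes (1GB = 10^9 bytes) and traffic spread evenly over the two hours, neither of which the dashboard figures confirm, and it only covers the top access points.

```python
def average_mbps(gigabytes: float, hours: float) -> float:
    """Convert a traffic total into an average rate in megabits per second.

    Assumes decimal units: 1 GB = 1e9 bytes and 1 Mbps = 1e6 bits/s.
    """
    if hours <= 0:
        raise ValueError("hours must be positive")
    bits = gigabytes * 1e9 * 8
    seconds = hours * 3600
    return bits / seconds / 1e6


# 6.8GB over the two-hour test, as quoted in the post.
print(f"{average_mbps(6.8, 2):.1f} Mbps average")
# prints "7.6 Mbps average"
```

An average says nothing about bursts, so it's a floor to compare against peak figures rather than a replacement for them.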

One department commented that the WiFi was slow; however, it wasn't possible to obtain further data due to user availability.

Internet connection peak

A peak of around 27Mbps was noted on the Internet connection that feeds the WiFi. Looking at historical graphs we can see peaks of 35Mbps, so the test's peak seems low. The connection has more bandwidth available than that, so it appeared to cope.

The remote access gateways also showed they were well within tolerance.

Engaging with the exercise

Unfortunately, not all of the staff engaged with the exercise. Some were overheard saying it was a great opportunity to clean their desks, while others were seen sharpening all the pencils in the stationery cupboard. Hopefully those people are already familiar with remote working and know what to do, although their lack of engagement meant it wasn't a complete test.

Effect on ICT

In the hour before the start of the test, the service desk took a number of calls asking for tokens to be provisioned. Ideally this would have been done ahead of "outage day" to reduce the impact on support for other users, but I've already commented on preparations. During the outage there weren't many calls, but then we had disabled their IP phones!

Conclusions

Planning for a disaster, and testing your plan, can be the difference between a business going bust and a business continuing to offer a service during a disaster. In the case of this test, the organisation should have been able to provide almost all of its services (phones would have been the complication), although I've not seen the final report on the test as yet.

When I was thinking about how I'd set up my own ICT business, I considered disaster recovery and business continuity. My plan included having a second instance of my Customer Relationship Management system at a geographically different site, using cloud-hosted email and telephony, and having 4G data available at all times. Obviously, as the number of users grows, that has to be scaled accordingly.

Banner image, Asteroid and the city, from Openclipart.org, by Juhele

[1] SaaS - Software as a Service, an application that's hosted "in the cloud".

[2] Image by Ocrho, edited from Wikimedia Commons